🛠️ Surviving a Real-World Host Crash with Proxmox HA

On April 7th, 2025, my 2-node Proxmox cluster experienced a true failure event: one node dropped off the network due to a hardware issue mid-operation. What could have resulted in downtime and investigation instead triggered a smooth failover, verified by logs, and confirmed within ~30–45 seconds of VM unavailability.

This post is a breakdown of what failed, how Proxmox HA handled it, how I monitored it with WhatsUp Gold, and what makes a 2-node cluster work reliably — all backed by actual logs and automation using the Proxmox API.

🔧 The Setup

- 2 Proxmox nodes: pve01 and pve02

- ZFS replication for critical VMs

- NFS shared storage for others

- QDevice (Raspberry Pi) as a third quorum voter

- Linux bonding (no LACP) on dual 10Gbps NICs

- WhatsUp Gold VM used as the primary monitored application

- Proxmox API + PowerShell integration to auto-add VMs to WhatsUp Gold

This environment was built from scratch, using GenAI to learn along the way — from HA setup to scripting discovery of the Proxmox VMs and integrating them with WhatsUp Gold.

🚨 What Triggered the HA Event?

The crash was rooted in a NIC failure within a Linux bonding interface. From the journalctl logs on pve01:

Apr 07 18:19:23 pve01 pmxcfs[3555]: [status] notice: node lost quorum

Apr 07 18:19:23 pve01 kernel: pstore: Using crash dump compression: deflate

Apr 07 18:23:39 pve01 kernel: ixgbe 0000:04:00.1 enso50: NIC Link is Down

Apr 07 18:23:42 pve01 kernel: ixgbe 0000:04:00.1 enso50: link status down, disabling slave

This shows the node’s bonded NIC interface (ens50) went down hard, breaking quorum. The root cause appears to be a faulty Gigabit interface converter (GBIC). Without the QDevice, this would have caused HA to stall or deadlock.

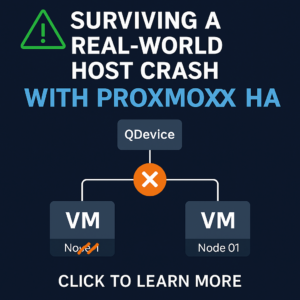

🔑 Why QDevice Is Essential

I know how essential it is because I tried this environment without one first. The same fault happened before I added the QDevice, and everything broke. When Proxmox suggest never doing a two node only cluster, they mean it. It will not work with the QDevice! If you have >2 nodes, you do not have to worry about a QDevice.

So what happened? When pve01 lost quorum, only two nodes remained in the cluster. Without a QDevice, Proxmox’s HA logic wouldn’t have had enough votes to elect a new leader or act. But because we had a QDevice, pve02 maintained majority and safely initiated recovery.

This small external voter (in our case, a Raspberry Pi) is critical in a 2-node Proxmox environment.

🧠 What HA Did Next

The following log entries from pve02 showed HA responding within seconds:

Apr 07 23:21:36 pve02 pve-ha-lrm[2494762]: <root@pam> starting service vm:117

Apr 07 23:21:37 pve02 pve-ha-lrm[2494764]: <root@pam> starting service vm:118Two critical VMs were affected:

- VMID 118 (ZFS + replication)

- VMID 117 (NFS shared disk)

Let’s look at their real recovery time.

⏱️ Verifying VM Recovery Time via Logs

We checked Windows Event Viewer logs inside the guest VMs to see when services started running again.

| VM ID | Storage | HA Start Time | Windows Service Start Time | Recovery Time |

| 118 | ZFS (2 disks) | 23:21:37 | 23:22:11 | 34 seconds |

| 117 | NFS (1 disk) | 23:21:36 | 23:22:19 | 43 seconds |

Even with two disks on VM 118, ZFS replication delivered a faster recovery than NFS. These logs confirm that the WhatsUp Gold service was back online in well under a minute.

🗂️ Architecture Summary

- Proxmox VE 8.3 on both nodes

- 2x 10Gb bonded NICs per node (no switch-side LACP)

- 1x QDevice Raspberry Pi running corosync-qnetd

- ZFS + replication (10 Gbe) for VM 118

- NFS (2 GBe) for VM 117

- WhatsUp Gold Windows Server 2022 VM

- PowerShell + Proxmox API to auto-discover VMs for monitoring

🔍 Monitoring VMs with the Proxmox API

Instead of customizing SNMP agents, which I learned is possible through this experiment, I used the Proxmox API + WhatsUpGoldPS PowerShell module to:

- Authenticate to the cluster

- Retrieve the VM guest data (IP, resource usage, tags, HA state)

- Dynamically push into WUG as monitored devices

Example: Guest Discovery PowerShell Snippet

$results = foreach ($node in $nodes) {$vms = Invoke-RestMethod -Uri "$ProxmoxHost/api2/json/nodes/$node/qemu" ...

foreach ($vm in $vms) {

# Get config, IP, resource metrics

}

}Each discovered VM was validated, filtered for valid IPv4, and added to WhatsUp Gold with active and performance monitors attached.

🤖 Built with GenAI, Tuned in Real-Time

🧠 What GenAI Helped Me Learn and Do

🔁 Cluster & HA Infrastructure

- ✅ Understand Proxmox HA behavior (HA groups, service states, recovery priorities, failover logic)

- ✅ Set up a QDevice safely to avoid split-brain and ensure quorum in a 2-node cluster

- ✅ Recover from a real-world HA failover, with a successful reboot and service restoration analysis

- ✅ Analyze HA logs to verify automatic VM relocation, timing, and behavior during a fault

🌐 Networking Deep Dive

- ✅ Correctly configure Linux bonding with

balance-rroractive-backupin the absence of LACP on the switch - ✅ Diagnose flaky bonded NIC behavior and its implications on cluster health, quorum, and availability

- ✅ Compare network configs across nodes to isolate subtle BIOS or firmware differences

🔐 Security and Certificates

- ✅ Safely rotate Proxmox SSL certificates without breaking HA, the cluster, or API auth

- ✅ Fix PowerShell script authentication issues with both classic (

ServicePointManager) and Core (SkipCertificateCheck) certificate validation bypasses

📦 Storage Strategy and Design

- ✅ Compare ZFS replication vs. NFS shared storage in HA failover timing

- ✅ Observe real-world behavior of ZFS-based VM recovery, including faster boot time with more disks

- ✅ Mount and explore VMFS partitions, even recovering data creatively without native tooling

🖥️ Monitoring and Scripting Automation

- ✅ Create a PowerShell integration using the Proxmox API to dynamically discover VMs and feed them into WhatsUp Gold

- ✅ Extend WUG device creation via REST API, including handling SNMP credentials, performance monitors, and tagging

- ✅ Add custom attributes per VM, indexed by ID, and structured for future SNMP extensions

- ✅ Validate guest IP addresses via qemu-guest-agent, correctly parsing nested response structures

- ✅ Use API-based monitoring instead of legacy SNMP polling, including tagging, device skipping, and duplication checks

🔍 Diagnostics and Troubleshooting

- ✅ Perform root cause analysis (RCA) using Proxmox logs, kernel messages, and HA manager output

- ✅ Validate reboot triggers, including reading

journalctlfor NIC down events, watchdog timeouts, and HA responses - ✅ Check exact VM uptime & service start times inside Windows, correlated with HA logs

- ✅ Compare disk and VM configurations across nodes, leading to better replication strategies

📊 Presentation & Documentation

- ✅ Create an architecture diagram of your Proxmox cluster with proper labeling (qdevice, WUG, HA behavior)

- ✅ Write a full-length blog post suitable for WordPress, Markdown, or HTML

- ✅ Break down the setup into reproducible steps, even for others who want to build this

- ✅ Export everything in multiple formats, including zip/HTML for import into documentation platforms

🧾 Final Thoughts

The final product is a stable, monitored Proxmox HA cluster with intelligent automation and real-world recovery proof.

- A flaky bonded NIC caused a node to crash

- QDevice saved the day by maintaining quorum

- HA failover of WhatsUp Gold completed in ~30–40 seconds

- Logs from both Proxmox and Windows confirm full-service restoration

- Even with two disks, ZFS + replication performed better than NFS

- Monitoring built with Proxmox API + PowerShell, no SNMP required

- GenAI made it all faster and more confident

This wasn’t just an experiment. This was real HA, real failure, real recovery — in production.

🏗️ Want to Build This Yourself? Step-by-Step Guide

This project isn’t just theoretical — it’s a fully functional, resilient cluster that you can build in a weekend (or a day, if you’re caffeinated enough). Here’s a breakdown of the major steps involved to recreate what you saw in this blog:

✅ Prerequisites

- 2 identical or similar Proxmox-capable hosts

- 1 small system for QDevice (a Raspberry Pi is perfect)

- Shared network switch (LACP not required)

- Optional: shared NFS or SMB storage (or local ZFS if you go replication-only)

🧱 Step-by-Step Build Process

🔹 Step 1: Acquire Hardware

- Minimum 2 servers, preferably 3 (dual NICs for bonding ideal)

- 1 Raspberry Pi or low-power Linux device for QDevice (only for 2-node clusters)

- SSD or NVMe drives (especially for ZFS pools)

- Optional: external NAS for NFS

🔹 Step 2: Install Proxmox VE 8.x

- Download the ISO from Proxmox Downloads

- Install to the servers

- Important note: they do not suggest installing to SD Card, but I used it in my case to maximize the ZFS pools storage space and RAID level

- Complete basic setup with static IPs and FQDNs

🔹 Step 3: Join Both Nodes into a Cluster

Run on one node (master):

pvecm create cluster-nameThen on the other node:

pvecm add <IP-of-first-node>Verify with:

pvecm status🔹 Step 4: Configure QDevice (for quorum)

On the Pi or 3rd system:

sudo apt install corosync-qnetd

sudo systemctl enable --now corosync-qnetdBack on Proxmox:

pvecm qdevice setup <IP-of-QDevice>Validate:

pvecm statusYou should now see 3 total quorum voters (2 nodes + 1 QDevice).

🔹 Step 5: Configure Networking

Use Linux bonding (not OVS) for simplicity:

- Bond ens49 + ens50

- Mode: balance-rr or active-backup if no switch config

- Bridge the bond to vmbr0

This allows high-speed, redundant 10GbE connectivity without needing LACP. Your available options will vary.

🔹 Step 6: Create ZFS Pools

ZFS offers replication and snapshots for HA. Create with:

zpool create zfspool mirror /dev/sdX /dev/sdYThen add in Proxmox GUI under Datacenter > Storage.

🔹 Step 7: Set Up Shared NFS Storage

Mount your NFS or SMB shares and configure them in:

Datacenter > Storage > Add > NFS

🔹 Step 8: Enable HA

- Add VMs to the HA group

- Enable “Managed by HA”

- Use the GUI or run:

ha-manager add vm:<vmid>Configure priority/recovery behavior as needed.

🔹 Step 9: Install Guest Agent in VMs

For IP discovery, install the QEMU guest agent:

Linux:

sudo apt install qemu-guest-agent

sudo systemctl enable --now qemu-guest-agentWindows: Install from the official ISO, mount it from within Proxmox or add to your template image

🔹 Step 10: Integrate Monitoring

Install SNMP + monitor with Proxmox API:

- Install net-snmp on hosts

- Use the Proxmox REST API + WhatsUpGoldPS PowerShell module

- Automatically register all VMs for monitoring

You can find the full automation script at the end of this blog.

🔚 That’s It!

Once HA and QDevice are in place, and VMs are replicated or running on shared storage, you’ll have a resilient, auto-failover cluster with real-world validation.

Update 2025-04-13 NAS with NFS for VMs died (lock/halt), recovered from backups before the array finished initializing after reboot attempt. VM on ZFS + replication did not miss a beat. WUG360 caught the issue and alerted me. My ‘main’ WUG Server was on the NFS storage that died!